Drilling down

When using an API the consumer generally wants to be able to:

- Access a subset of a large collection of resources (paging)

- Order the set of resources they get back.

- Filter the resources returned based on some criteria.

- Aggregate across the resources matching the filter (at the very least get a total count back)

Enterprise Tester aims to provide ways to achieve all of this - the approach we took was fairly pragmatic:

- Leverage existing query language investments (we have a query language in our application already, it makes sense to also expose this in the API)

- For anything else, use the OData $filter functionality to filter it (we didn't want to invent another query language)

TQL Support

Query Language

TQL (Testing Query Language) is a Domain Specific Query language developed for searching and aggregating test information - and worth a series of posts all on it's own - but was definitely an existing investment we wanted to leverage when building out the products API.

TQL Queries can be quite simple:

Status = Open

Or quite complex (the below query would find any requirements which are associated (indirectly) to bugs with a Resolution of 'Wont Fix' raised in the last week).

EntityType = Requirement

AND Relationships IN {

Destination IN {

Type = Bug

AND Resolution = 'Wont Fix'

AND CreatedAt >= "-1 week"

}

}

ORDER BY Package ASC, LastUpdatedAt DESC

The parsing of the Query Language is implemented in F# using FParsec (if you haven't looked at FParsec, then umm.. you should - I can't say enough good things about this library!)

We have not so for had to make any changes to the query language to make it more palatable to consumption from the API - I think a few things worked in our favor there:

- Quoting strings is optional for words not containing whitespace, and you can use single or double quotes.

- Encoding of strings follows the JSON conventions for escaping etc.

- When implementing the parser we ensured it was whitespace insensitive - so the above query can also just be written on a single line.

- We did not use symbols for AND and OR logical operators - so we avoided using ampersands [&] for AND

- Having the query include ordering info avoided the need for a second order/sort query parameter

This allowed us to make it easy to search via the API without having to URL encode the query parameter in many cases.

Working against our favor is Lucene itself - the query language allows performing a contains search using a Tilde (~) operator:

Name ~ "defect bug"

Within the string being searched for we support the use of Lucene query parser syntax:

Name ~ "te?t" AND Description ~ "'REST API' && 'Curl Example'"

This can trip up people experimenting with the API directly within a browser, where in some cases not escaping these characters correctly can result in part of their query being parsed as a parameter - so far this hasn't really proven to be much of an issue.

Controller

The implementation of a controller taking a TQL query was fairly simple:

public HttpResponseMessage Get(string tql = null)

{

QueryResults results = _entitySearcher.Search(Request.RequestUri, tql, Skip ?? 0, Top ?? DefaultTopSearchResults);

List wrapped = results.Items.Select(Wrap).ToList();

var wrappedResults = new QueryResults

{

Items = wrapped

};

if (!NoInlineCount)

{

wrappedResults.Skip = results.Skip;

wrappedResults.Top = results.Top;

wrappedResults.Total = results.Total;

}

HttpResponseMessage response = Request.CreateResponse(HttpStatusCode.OK, wrappedResults);

return response;

}

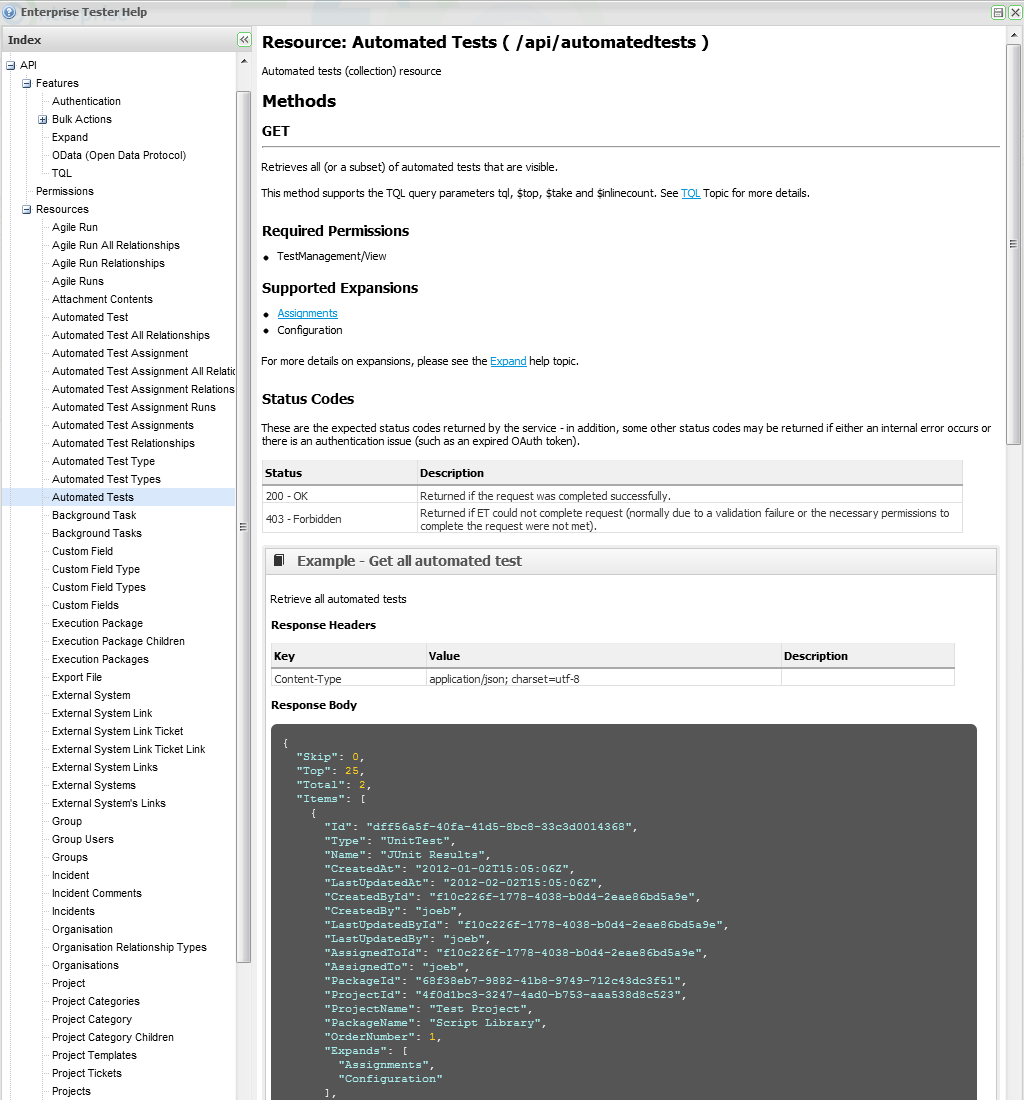

The implementation defaults to including skip/top/total as well as next/prev/first/last links in the response - but we did provide a way for client consumer to excluded that information if they so desired (i.e. if implementing a search which is guaranteed to return 1 result) - by passing in the OData $inlinecount query parameter.

GET /api/automatedtests?tql=Name~Selenium&$inlinecount=none

We also exposed a search method, allowing you to execute TQL queries to search across all the entity types at once, implementation of the controller there was similar - one thing we did do is leverage the "Expansions" dictionary every view model has to embellish it with the type of the search result (as a property called "EntityType").

wrappedResults.Items = results.Items.Select(result =>

{

object mapped = _viewModelMapper.MapSearchResult(result, Expands);

string type = QueryUtility.FormatTypeForDisplay(result.GetUnproxiedType());

((AbstractModel) mapped).Expansions.Add("EntityType", type);

return mapped;

}).ToList();

In the case of search results we are dealing directly with NHibernate entities, which can be proxies - thus the call to .GetUnproxiedType().

OData

I always feel a little disingenuous referring to OData in our API docs - but unfortunately I don't know of a good term for what we are doing.

Our support for OData extends as far as filtering a set of results (GET requests) and goes no further - we certainly did not build an OData compliant API, or ensure the shape of our results conformed to something an OData consumer may expect.

The filtering specification outlined in OData though is incredibly useful to avoid inventing yet another query language unnecessarily (and was one of the draw cards for using WebAPI in the first place).

- $expand

- $filter

- $inlinecount

- $orderby

- $skip

- $top

Initially our collection resource GET methods looked like this (or in some cases with additional query parameters to identify the collection owner)

[Queryable]

public IQueryable Get()

{

...

}

But as we moved through the pre-releases for the WebAPI we hit a bit of a snag in that OData support was pulled from the beta - we knew the problem would eventually be rectified, but in the mean time we had code that didn't work any more - so we pulled the necessary pieces that made up OData execution in earlier builds and re-introduced support - so our controllers ended up like this:

public HttpResponseMessage Get()

{

QueryResults results = ODataQueryExecutor.Execute(someQueryable, Request.RequestUri);

HttpResponseMessage response = Request.CreateResponse(HttpStatusCode.OK, results);

return response;

}

And the code to handle executing the OData query and return the results:

public static class ODataQueryExecutor

{

public static QueryResults Execute(IEnumerable items, Uri uri)

{

StructuredQuery structuredQuery = ODataQueryDeserializer.GetStructuredQuery(uri);

IStructuredQueryPart inlineCount = structuredQuery.QueryParts.FirstOrDefault(p => p.QueryOperator == "inlinecount");

var resultsPage = new QueryResults();

if (inlineCount != null && inlineCount.QueryExpression == "allpages")

{

resultsPage.Total = ((IQueryable) ODataQueryDeserializer.Deserialize(items.AsQueryable(), GetQueryWithoutTopOrSkip(structuredQuery).QueryParts)).Count();

resultsPage.Top = TryParseQueryPartAsInt(structuredQuery.QueryParts, "top");

resultsPage.Skip = TryParseQueryPartAsInt(structuredQuery.QueryParts, "skip");

}

resultsPage.Items = ((IQueryable) ODataQueryDeserializer.Deserialize(items.AsQueryable(), structuredQuery.QueryParts)).ToList();

resultsPage.SetSelfAndGenerateLinks(uri);

return resultsPage;

}

This allowed us to just do filtering, and get the results back in our familiar QueryResults form (so we ensure consistency with the equivalent TQL query responses).

Aggregation

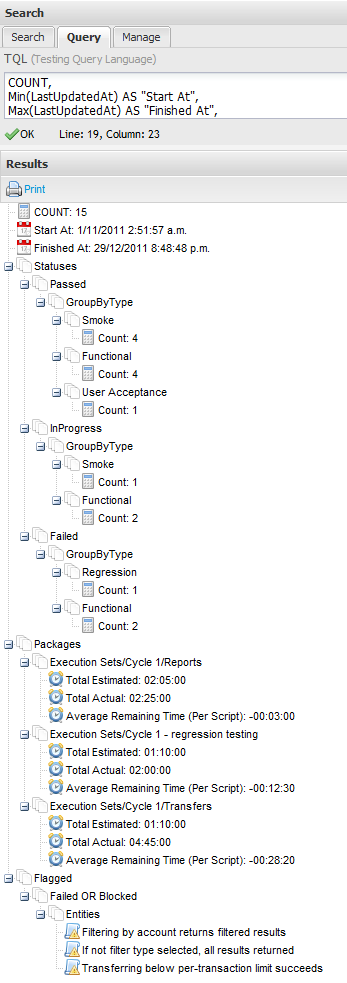

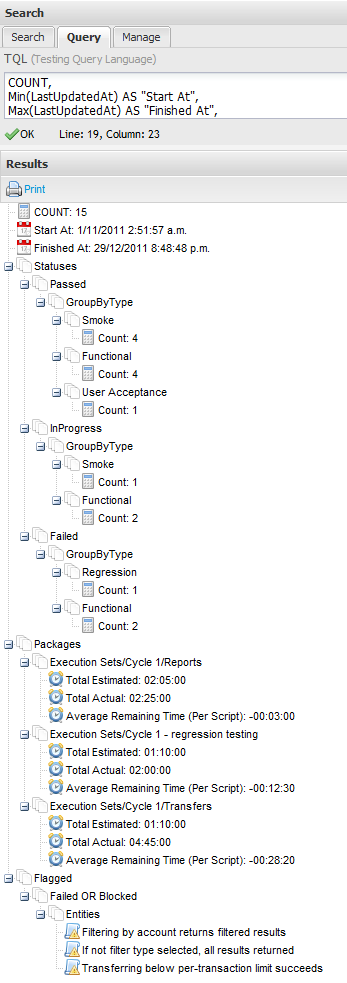

The TQL (Testing Query Language) also features the ability to perform aggregations (Think group by, count, sum, average, faceted querying etc.) - these can be quite complex:

COUNT,

Min(LastUpdatedAt) AS "Start At",

Max(LastUpdatedAt) AS "Finished At",

GROUP BY Status {

GROUP By Type {

Count

}

} AS "Statuses",

GROUP BY Path {

SUM(EstimatedDuration) AS "Total Estimated",

SUM(ActualDuration) AS "Total Actual",

AVG(EstimatedLessActualDuration) AS "Average Remaining Time (Per Script)"

} AS "Packages",

FACETED OrArgs(Equal(Status,Failed), Equal(Status,Blocked)) AS "Failed OR Blocked" {

Entities

} AS "Flagged"

WHERE EntityType = ScriptAssignment

AND Project = 'Project X'

AND Status != NotRun

Which within the application would return a result like this:

And from the API, returns a result like this:

{

"Results": {

"COUNT": 15,

"Start At": "2011-11-01T02:51:57Z",

"Finished At": "2011-12-29T20:48:48Z",

"Statuses": {

"Passed": {

"GroupByType": {

"Smoke": {

"Count": 4

},

"Functional": {

"Count": 4

},

"User Acceptance": {

"Count": 1

}

}

},

"InProgress": {

"GroupByType": {

"Smoke": {

"Count": 1

},

"Functional": {

"Count": 2

}

}

},

"Failed": {

"GroupByType": {

"Regression": {

"Count": 1

},

"Functional": {

"Count": 2

}

}

}

},

"Packages": {

"Execution Sets/Cycle 1/Reports": {

"Total Estimated": "02:05:00",

"Total Actual": "02:25:00",

"Average Remaining Time (Per Script)": "-00:03:00"

},

"Execution Sets/Cycle 1 - regression testing": {

"Total Estimated": "01:10:00",

"Total Actual": "02:00:00",

"Average Remaining Time (Per Script)": "-00:12:30"

},

"Execution Sets/Cycle 1/Transfers": {

"Total Estimated": "01:10:00",

"Total Actual": "04:45:00",

"Average Remaining Time (Per Script)": "-00:28:20"

}

},

"Flagged": {

"Failed OR Blocked": {

"Entities": [

{

"Id": "5b932463-6089-4bc9-9e23-a0b100e133b0",

"EntityType": "ScriptAssignment",

"Self": "http://localhost/EnterpriseTester/api/scriptassignment/5b932463-6089-4bc9-9e23-a0b100e133b0"

},

{

"Id": "39c54b0c-5340-4b93-ace9-a0b100e13519",

"EntityType": "ScriptAssignment",

"Self": "http://localhost/EnterpriseTester/api/scriptassignment/39c54b0c-5340-4b93-ace9-a0b100e13519"

},

{

"Id": "ac8e5dce-322c-4e05-9975-a0b100e13375",

"EntityType": "ScriptAssignment",

"Self": "http://localhost/EnterpriseTester/api/scriptassignment/ac8e5dce-322c-4e05-9975-a0b100e13375"

}

]

}

}

}

}

To achieve this we needed to parse the query to determine if it was an aggregation, and then handle the search query differently in each case:

public HttpResponseMessage Get(string tql = null, string format = null)

{

tql = tql ?? string.Empty;

PresentationContext context = CreatePresentationContext();

EnterpriseTester.Core.Search.AST.Query parsed = _queryParser.ParseQuery(tql);

if (parsed.IsAggregatedExpression && !string.IsNullOrEmpty(tql))

{

return HandleAggregatedExpression(parsed , format, context);

}

return HandleQuery(parsed, format, context);

}

Aggregated search results are generated as a set of nested IDictionary instances - which actually serialize very well to JSON when using JSON.Net - all except our entities...

With the aggregated queries support in TQL, you can use functions such as COUNT, SUM(Field), AVG(Field) and so on... one of these functions Entities - which actually just returns an List of all the entities matching the criteria. This is not something SQL does, but this isn't SQL now is it - so there is nothing stopping us returning an array as opposed to a single value for any node in the tree of results.

By default Entities will stop being collected by the query after there is 25, but the limit can be increased too if necessary. This feature is really useful when combined with a faceted search where expressions and formulas can be used to calculate which entities to include or not, and where you don't expect to get a large number of matching entities.

So within the dictionary of results returned from the TQL query engine for the aggregated query, we may have a list of EntityInfo elements, one for each entity returned from a "Entities" aggregate function.

EntityInfo consisted of the CLR Type and Guid ID for the entity - not something we want to expose in our API (but very useful for other parts of the application) so to overcome this we pass a visitor over the dictionary of results to rewrite these into a form that's palatable for our API consumers:

HttpResponseMessage CreateDefaultAggregatedResponse(IDictionary results)

{

var visitor = new ResolveEntityInfoDictionaryVisitor(entity =>

{

string entityType = QueryUtility.FormatTypeForDisplay(entity.EntityType);

return new Dictionary

{

{"Id", entity.Id},

{"EntityType", entityType},

{AbstractModel.SelfProperty, _viewModelMapper.ResolveUrlForEntityTypeResource(entityType,entity.Id)}

};

});

visitor.Visit(results);

return Request.CreateResponse(HttpStatusCode.OK, new RawAggregatedQueryModel {Results = results});

}

So we ensure those aggregation results now have a URL pointing at the resource for that entity.

Next

Next in part 5 we take a look at how we handled Authentication, including support for Session, Basic and OAuth.